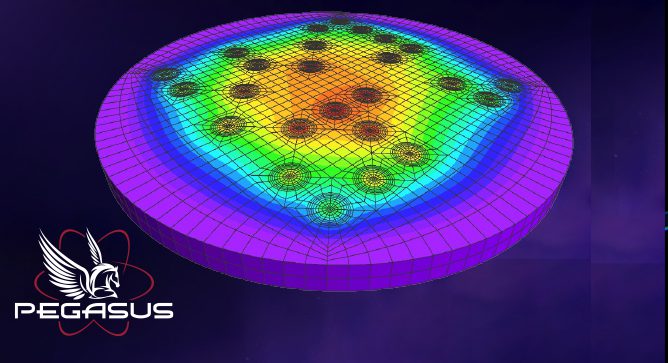

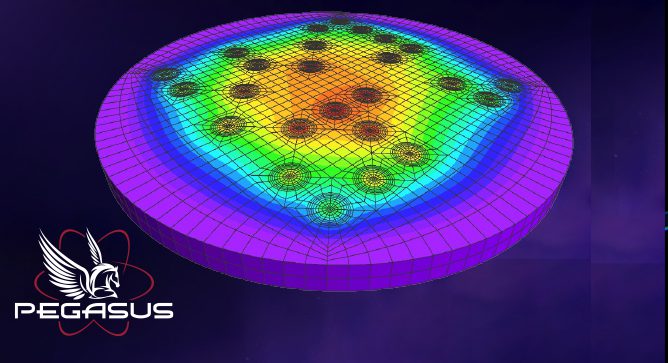

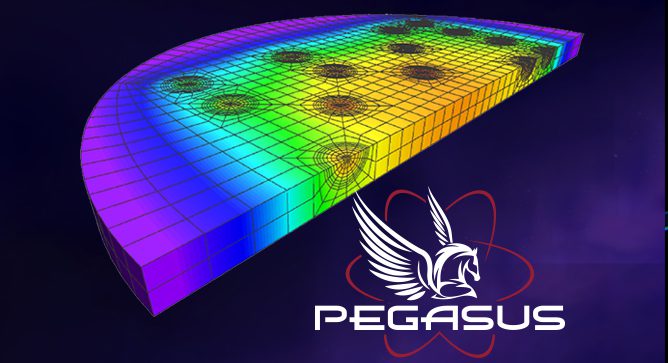

PEGASUS™ Nuclear Fuel Code

ADVANCED FUEL MODELING DEVELOPMENT STATUS By: Bill Lyon INTRODUCTION The PEGASUS nuclear fuel behavior code

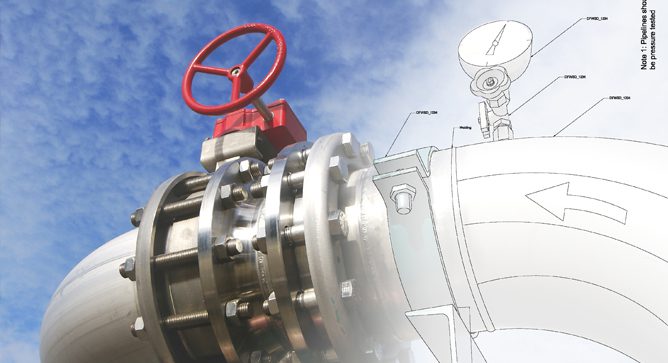

PREPARING CLIENTS TO MEET NEW PIPELINE AND SAFETY REGULATION By: Bruce Paskett and Erica Rutledge

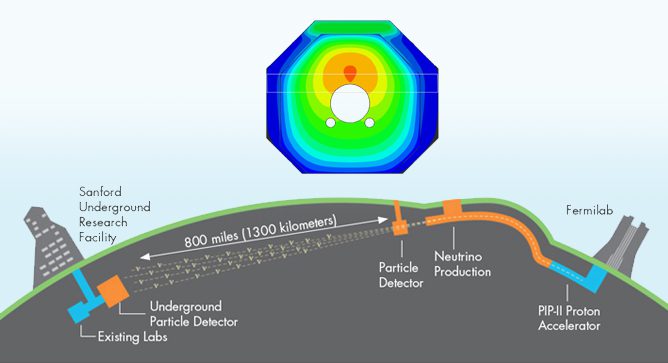

A CASE STUDY FROM THE FERMILAB LONG BASELINE FACILITY By: Keith Kubischta and Andy Coughlin,

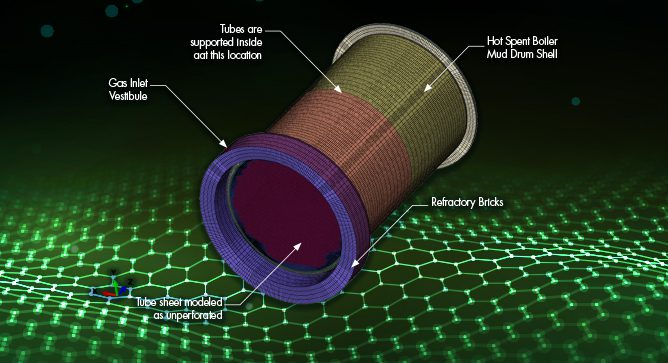

By: Kannan Subramanian, PhD, PE, FASME & Dan Parker, PE BACKGROUND The hot section of

OIL & GAS SAFETY & RELIABILITY By: Scott Riccardella, Owen Malinowski & Dr. Pete Riccardella

DEVELOPMENT FOR TRISO FUEL AND ADVANCED REACTOR APPLICATIONS By: Bill Lyon The PEGASUS code allows

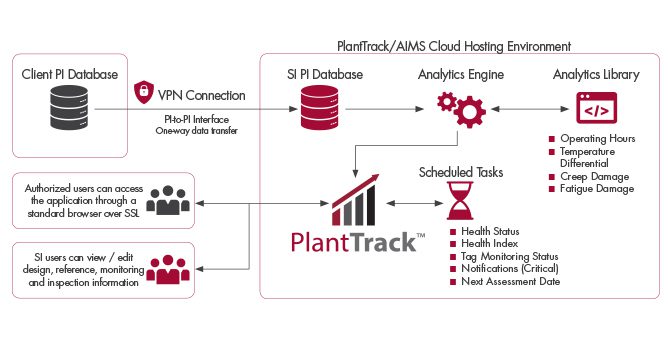

A CASE STUDY ON IMPLEMENTATION AT A 3X1 COMBINED CYCLE FACILITY (ARTICLE 1 OF 3)

POWER PLANT ASSET MANAGEMENT SI’s technology differs from most systems by focusing on MODELING OF

January 31st – February 2nd, 2023 COURSE DESCRIPTION Our High Energy Piping (HEP) Seminar for

By: Clark McDonald In the world of metallurgical failure analysis, areas of interest on broken